One Stop to SAP BODI/BODS

BODI (Business Objects Data Integrator) or BODS (Business Objects Data Services) is a GUI workspace for creating jobs that extract data from heterogeneous sources, transform that data using built-in transforms and functions to meet business requirements, and then load the data into a single datastore or data warehouse for further analysis.

SAP Business Objects Data Integrator can be used for both real-time and batch jobs. This article is your one-stop guide to BODI.

One stop look into BODI Features

Trusted Information

- BusinessObjects Metadata Manager

- Data quality firewall

- Data profiling

- Data cleansing

- Data validation

- Data auditing

- End-to-end impact analysis

- End-to-end data lineage

IT Productivity

- BusinessObjects Composer

- Single development environment

- Secure, multi-user developer collaboration

- Complete end-to-end metadata analysis

Scalable Architecture

- Parallel processing

- Intelligent distributed processing

- Grid computing support

- Broad source and target support

BO Data Integrator Architecture: Designer, Repository and Server

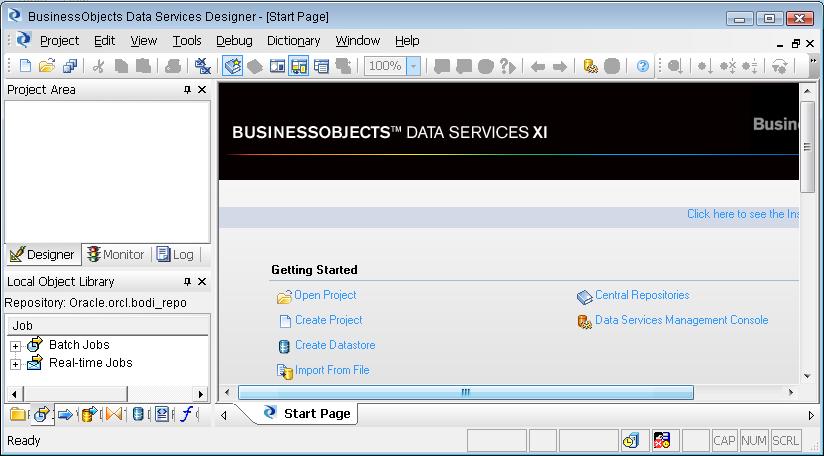

1. Designer - Designer is used to create datastores and data mappings, to manage metadata and to manually execute jobs.

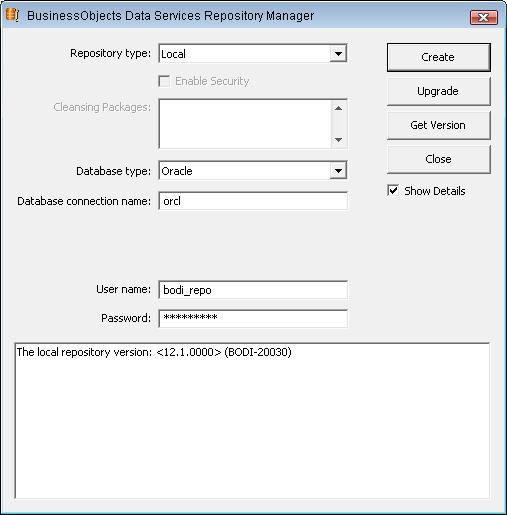

2. Repository - A set of tables in an RDBMS that holds the user-created and predefined system objects, source and target metadata, and transformation rules. There are three types of repositories:

- Local Repository - Stores source and target metadata definitions and transformation rules.

- Central Repository - An optional component used to support a multi-user development environment. It provides a shared library in which objects can be checked in and out.

- Profiler Repository - Stores information that is used to determine the quality of data.

3. DI Engines and Job Server - The DI Job Server retrieves the job information from its associated Repository and starts an engine to process the job. The DI Engine extracts data from multiple heterogeneous sources, performs complex data transformations and loads data into the target, using parallel pipelining and in-memory data transformations to deliver high data throughput and scalability.

The DI Job Server is comparable to the Informatica Integration Service, which is associated with its corresponding Informatica metadata Repository that holds the transformation rules to move data from source to target.

Note that each Repository is associated with one or more DI Job Servers.

4. Access Server - This is used for DI Real-time service jobs. This Server controls the XML message passing between the source and target nodes in Real-Time jobs.

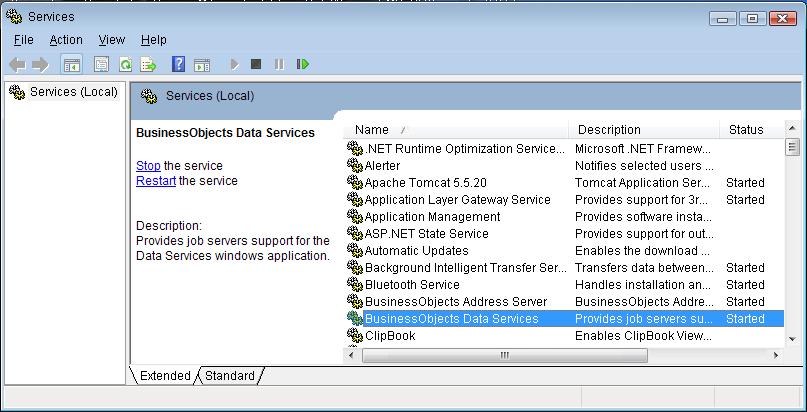

Windows service name: Business Objects Data Services.

Service names:

- al_Designer

- al_jobserver

- al_jobservice

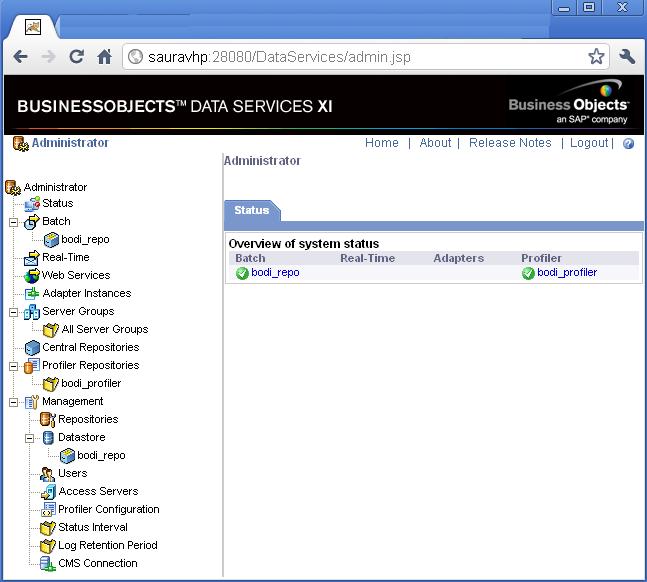

BODI Management Console

This provides browser-based access to administration and reporting features. The Data Integrator Web Server, a Tomcat servlet engine, is used to support browser access.

The BODI/BODS Administrative Console provides access to the following features:

1. Administrator: Administer DI resources. This includes access to the following functionality:

- Scheduling, Monitoring and Executing batch Jobs.

- Configuring, Starting and Stopping Real-Time Services.

- Configuring Job Server, Access Server and Repository usage.

- Configuring and managing Adapters.

- Managing Users and user permissions and roles.

- Publishing Batch Jobs and Real-Time services via web services.

- Reporting on Metadata.

2. Auto Documentation: View, analyze and print graphical representations of all objects as depicted in DI Designer, including their relationships and properties.

3. Data Validation: Evaluate the reliability of the target data based on the validation rules created in the Batch Jobs in order to quickly review, assess and identify potential inconsistencies or errors in source data.

4. Impact and Lineage Analysis: Analyze end-to-end impact and lineage for DI tables and columns, and BO objects such as universes, business views and reports.

5. Operational Dashboard: View dashboards of DI execution statistics to see at a glance the status and performance of the Job execution for one or more repositories over a given time period.

BODI Management Tools

These tools are used to administer and manage the DI Repository and Job Server.

1. Repository Manager: Create, Upgrade and Check the versions of Local, Central and Profiler repositories.

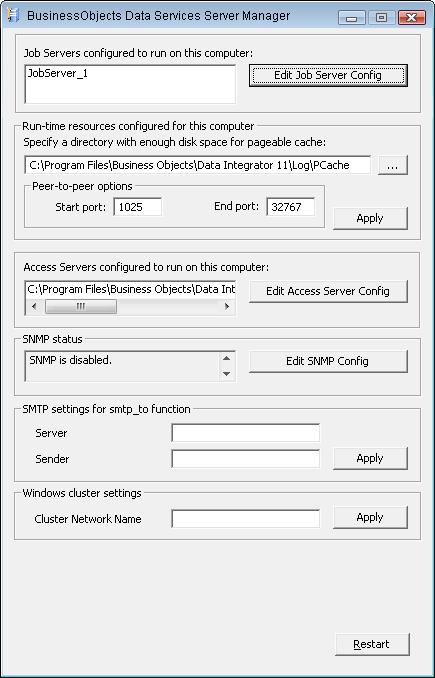

2. Server Manager: Add, delete or edit the properties of Job Servers. It is installed automatically on each computer on which a Job Server is installed. The Server Manager defines the links between Job Servers and repositories. Multiple Job Servers on different machines can be linked to a single repository (for load balancing), or a single Job Server can be linked to multiple repositories (with one as the default) to support separate repositories, e.g. for a test environment and a production environment.
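The two linking patterns described above can be sketched as a small data model. This is only an illustration in Python, not the Server Manager's own configuration format; the class names, host names and repository names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Repository:
    name: str


@dataclass
class JobServer:
    host: str
    repositories: List[Repository] = field(default_factory=list)
    default_repository: Optional[Repository] = None

    def link(self, repo, default=False):
        """Link this Job Server to a repository, optionally as its default."""
        self.repositories.append(repo)
        # The first linked repository becomes the default unless another is flagged.
        if default or self.default_repository is None:
            self.default_repository = repo


def servers_for(repo, servers):
    """Return every Job Server linked to the given repository."""
    return [s for s in servers if repo in s.repositories]


dev = Repository("DEV_REPO")    # hypothetical repository names
test = Repository("TEST_REPO")

# Pattern 1: several Job Servers on different machines share one repository.
js_a = JobServer("host-a")
js_a.link(dev)
js_b = JobServer("host-b")
js_b.link(dev)

# Pattern 2: one Job Server serves several repositories, one of them the default.
js_c = JobServer("host-c")
js_c.link(test, default=True)
js_c.link(dev)

servers = [js_a, js_b, js_c]
dev_hosts = [s.host for s in servers_for(dev, servers)]
print(dev_hosts)
print(js_c.default_repository.name)
```

How work is actually distributed among the Job Servers that share a repository is not modeled here.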

Data Integrator Objects

Data Integrator provides a variety of Objects to use when building ETL applications. In DI all entities we add, define, modify or work with are Objects. Some of the most frequently used Objects are:

- Projects

- Jobs

- Work flows

- Data flows

- Transforms

- Scripts

Every Object is either Re-Usable or Single-Use. Single-Use Objects in BODI include the Project and the Script. We cannot copy single-use objects. Most objects created in DI are available for re-use. Re-usable objects are globally accessible.

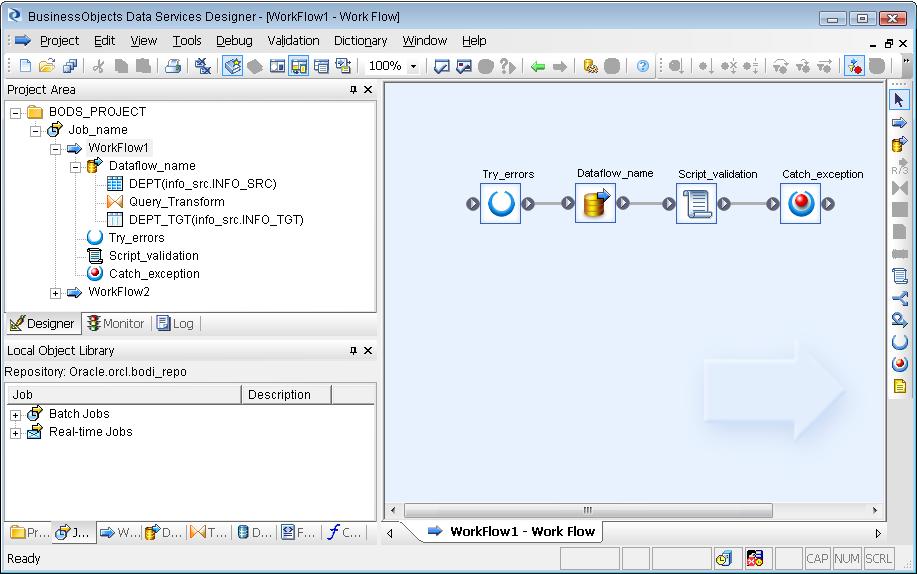

1. Projects: A Project is the highest-level object in Designer. A Project is a single-use object that allows us to group and organize Jobs in Designer.

Only one project can be open and visible in the Project Area at a time. It is comparable to a Folder in Informatica PowerCenter.

2. Jobs: Jobs are composed of work flows and/or data flows. A Job is the smallest unit of work that can be scheduled independently for execution. Jobs must be associated with a Project before they can be executed in the Project Area of DI Designer. A Job is comparable to a Workflow in Informatica.

3. Workflows: A Work flow combines several Data flows into a sequence. A Work flow orders Data flows and the operations that support them.

It also defines the interdependencies between Data flows. Work flows can be used to define strategies for error handling or to define conditions for running the Data flows. A Work flow is optional. It can be treated like a Worklet in Informatica.

4. Dataflows: A Data flow is the process by which source data is transformed into target data. It is similar to Mappings in Informatica.

5. Transforms: Transforms are the built-in transformation objects available in DI for transforming source data as per business rules, e.g. Case and Merge. They are similar to Transformations in Informatica. A good number of transforms are available in DI, including some Data Quality transforms.

6. Script: A Script is a single-use object that is used to call functions and assign values in a Work flow. The DI scripting language is used to apply decision-making and branch logic to Work flows.
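The containment described above (Project, then Jobs, then Work flows and Data flows) can be sketched as plain Python data classes. This is a conceptual model only, not a Data Integrator API; every class and object name below is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class DataFlow:
    name: str


@dataclass
class WorkFlow:
    name: str
    steps: List[DataFlow] = field(default_factory=list)  # ordered data flows


@dataclass
class Job:
    name: str
    # A job is composed of work flows and/or data flows, in sequence.
    steps: List[Union[WorkFlow, DataFlow]] = field(default_factory=list)


@dataclass
class Project:
    name: str
    jobs: List[Job] = field(default_factory=list)


def execution_order(node):
    """Flatten a job or work flow into the ordered list of data flow names."""
    if isinstance(node, DataFlow):
        return [node.name]
    order = []
    for step in node.steps:
        order.extend(execution_order(step))
    return order


load_dims = WorkFlow("WF_Load_Dimensions",
                     [DataFlow("DF_Customer"), DataFlow("DF_Product")])
df_sales = DataFlow("DF_Sales_Fact")
job = Job("JOB_Nightly_Load", [load_dims, df_sales])
project = Project("PRJ_Sales_DW", [job])

# A re-usable object can be referenced from more than one job.
adhoc = Job("JOB_Reload_Sales", [df_sales])
project.jobs.append(adhoc)

order = execution_order(job)
print(order)
```

Here the same `df_sales` object appears in two jobs, which mirrors the idea that re-usable objects are defined once and referenced wherever they are needed.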

Defining Source and Target Metadata

To define data movement requirements in BODI we must import source and target metadata.

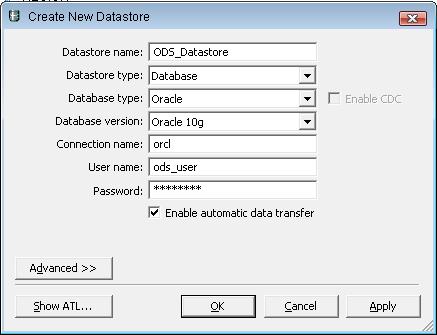

Datastores represent connections between Data Integrator and Relational Databases or Application Databases. Through the datastore connection DI can import the metadata from the data source. DI uses these datastores to read data from source tables or load data to target tables.

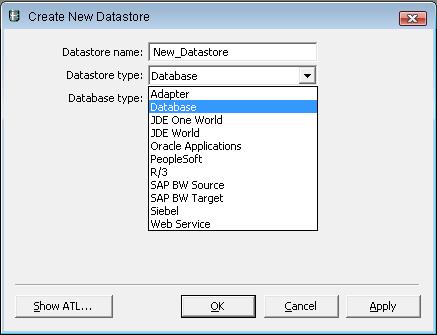

There are three kinds of Datastores namely Database Datastores (RDBMS), Application Datastores (ERP) and Adapter Datastores (3rd Party applications).

To create a Datastore:

In the Datastores tab of the Local Object Library, right-click in the Datastores pane and select New, then enter the datastore name and database credentials. Leave the Enable automatic data transfer check box selected.

Next, to import table definitions or stored procedures, right-click the datastore name and select Import By Name, then enter the type and name of the object to import.

To edit a table definition, double-click the table and modify it as needed.
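To make "importing metadata through a connection" concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in database. It does not use any Data Integrator interface; the function name `import_table_metadata` and the `customers` table are hypothetical, and the catalog query is SQLite-specific.

```python
import sqlite3


def import_table_metadata(conn, table_name):
    """Return (column name, declared type) pairs for one table."""
    if not table_name.isidentifier():
        raise ValueError(f"unexpected table name: {table_name!r}")
    # PRAGMA table_info is SQLite-specific; other databases expose the
    # same information through their own catalog views.
    rows = conn.execute(f"PRAGMA table_info({table_name})").fetchall()
    # Each row is (cid, name, type, notnull, dflt_value, pk).
    return [(row[1], row[2]) for row in rows]


# The connection plays the role of the datastore.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)"
)

columns = import_table_metadata(conn, "customers")
print(columns)
```

Only the structure of the table is read here, not its rows, which is the distinction between importing metadata and reading data.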

Defining File formats for flat files

File Formats are connections to flat files in the same way that datastores are connections to databases. The Local Object Library stores file format templates that are used to define specific file formats as sources and targets in dataflows.

There are three types of file format objects: Delimited format, Fixed Width format and SAP R/3 format (predefined Transport_Format).
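The difference between the delimited and fixed-width layouts can be illustrated with a short Python sketch. It is not how Data Integrator reads files internally; the column names, widths and sample rows are made up for the example.

```python
import csv
import io

COLUMNS = ["id", "name", "city"]   # hypothetical column names
WIDTHS = [4, 10, 8]                # fixed-width layout: characters per column


def read_delimited(text, delimiter=","):
    """Split each line on a delimiter character."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    return [dict(zip(COLUMNS, row)) for row in reader]


def read_fixed_width(text):
    """Slice each line at fixed character positions and trim the padding."""
    records = []
    for line in text.splitlines():
        values, start = [], 0
        for width in WIDTHS:
            values.append(line[start:start + width].strip())
            start += width
        records.append(dict(zip(COLUMNS, values)))
    return records


rows = [["1", "Alice", "Pune"], ["2", "Bob", "Delhi"]]

# The same two records written in both layouts.
delimited = "\n".join(",".join(r) for r in rows)
fixed = "\n".join(
    "".join(value.ljust(width) for value, width in zip(r, WIDTHS)) for r in rows
)

same_records = read_delimited(delimited) == read_fixed_width(fixed)
print(same_records)
```

In both cases the format definition (a delimiter, or a list of column widths) is what turns a line of text into named columns, which is the role a file format template plays for a flat file.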

The file format editor is used to set properties of the source/target files. The editor has three working areas: Property Value, Column Attributes and Data Preview.

To create a file format from an existing one, right-click the existing file format and select Replicate.