Auditing in SAP Data Services

A proper data reconciliation process must be in place in any data Extraction-Transformation-Load (ETL) process. A successful reconciliation process should only indicate whether or not the data is correct. But data reconciliation is not easy. Fortunately for us, Data Services (BODS) provides an in-built data reconciliation feature called Auditing. Auditing is a way to ensure that a dataflow loads correct data into the target warehouse. Let's see how...

Audit objects are used to collect run time audit statistics about the objects within a data flow. Using the Audit feature we can collect statistics about data read into a job, processed by various transforms, and loaded into targets.

We define audit rules to determine if the correct data is processed. Also in the event of audit rule failure there is a provision to generate notification of audit failures.

Auditing stores these statistics in the repository for further future analysis. In event of any mismatch in source/target row counts or sum of measures notification can be set, to highlight the audit failure for our immediate action like reload, identify incorrect source data etc.

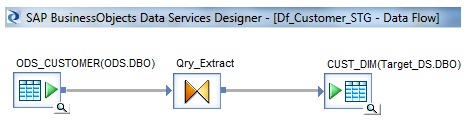

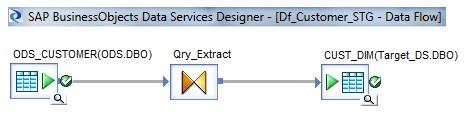

Now let us see how easy it is to enable Audit option in Data Services. Find below a sample dataflow on which we will Audit the count of Source and Target record count and also will verify the HASH value of character datatype columns between Source and Target.

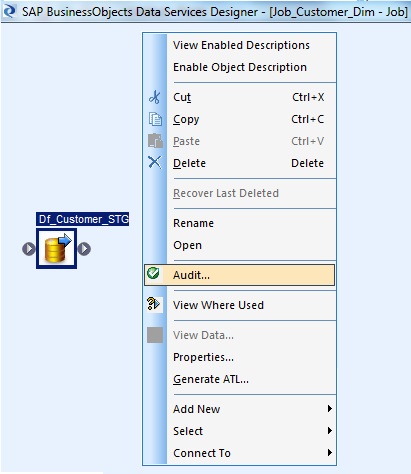

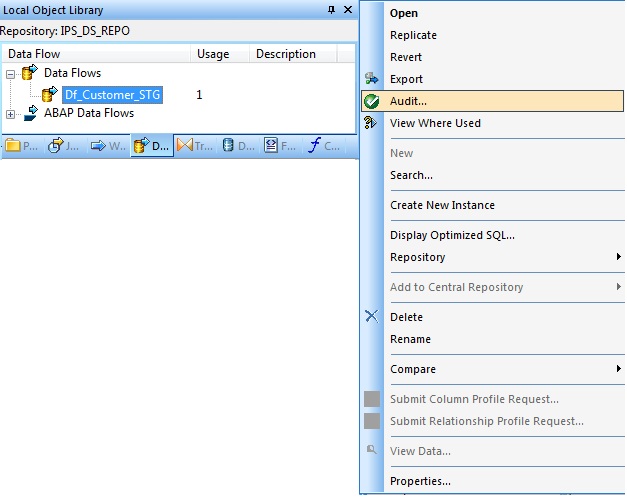

We can enable the Audit option property of the dataflow either by right-click the Dataflow in Workspace or from the Local Object Library.

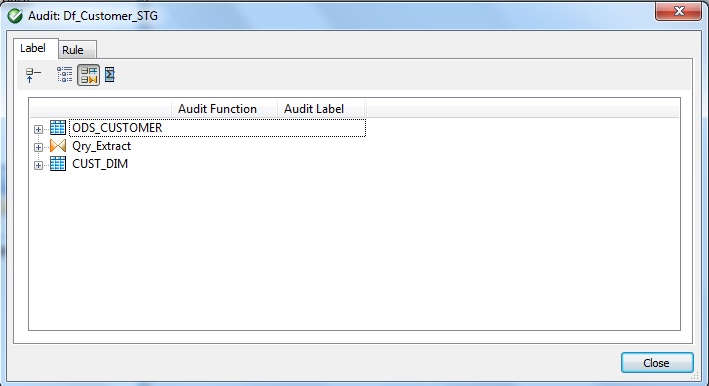

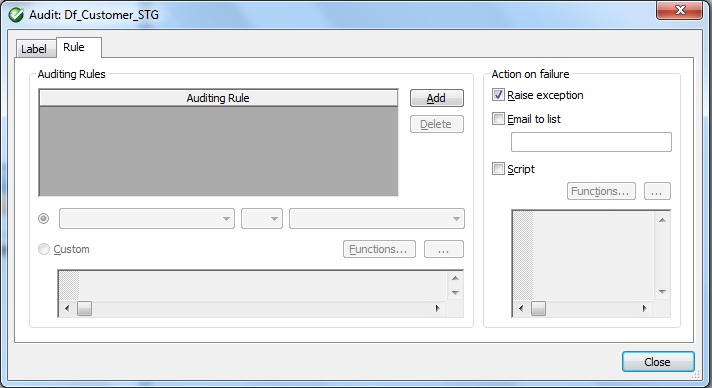

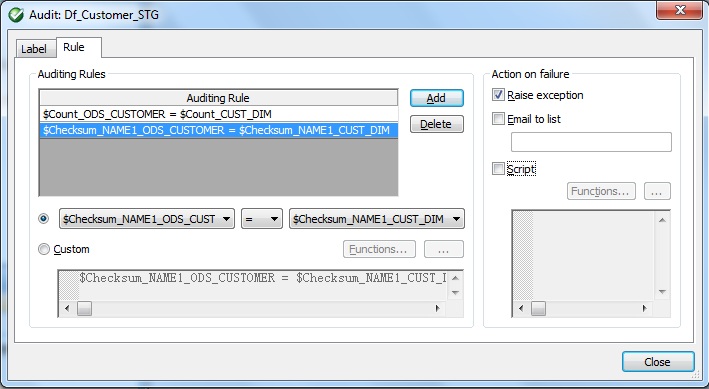

On selection of Audit, we have two tabs namely Label and Rule which is described in details below.

Auditing Features

Following are the various available features of auditing:

<ul>

- Define Audit Points to collect run-time statistics about the data.

E.g. Number of rows extracted from Source, Processed by Transforms, Loaded into Targets

- Define Audit Rules for the audit statistics to ensure expected data at the audit points in the dataflow.

- Generate run-time notification for the audit rule that fails i.e. Action on failure and the values of the audit statistics for which the rule failed.

- Display Audit Statistics after the job execution to identify the object/transform that processed incorrect data in the dataflow.

Auditing Objects in a Dataflow

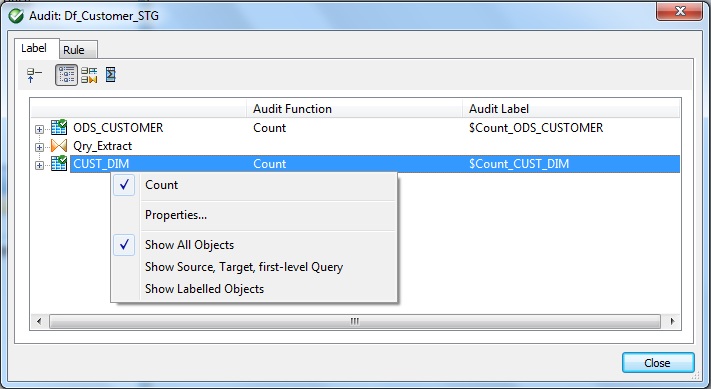

- Audit Point – This is the object in a dataflow where audit statistics is collected. i.e. Source, Transform or Target.

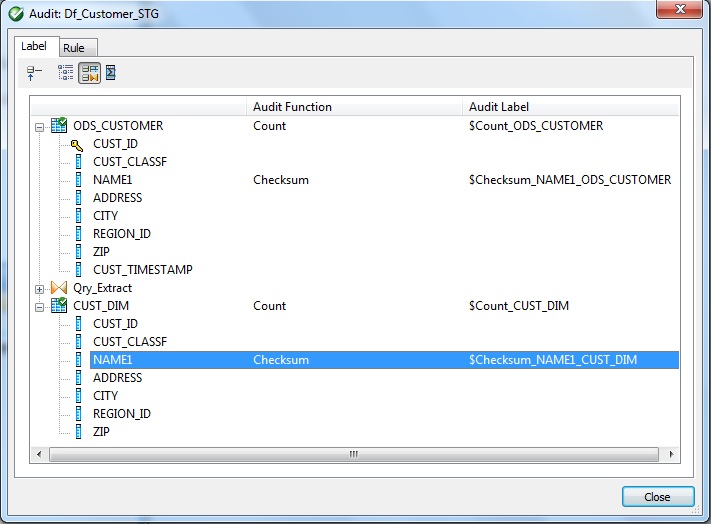

- Audit Function – Defines the collects audit statistics for a table, output schema or column. E.g. Count, Sum, Average, Checksum

- Audit Label – This is a unique name generated by BODS for each audit function that is defined on an audit point to collect the audit statistics.

- Audit Rule – A Boolean expression which uses audit labels to verify a job.

- Action on Audit Failure – Ways to generate notification for audit rules failure.

Audit Labels

The software generates a unique name for each audit function that we define on an audit point and are editable.

If the audit point is on a table or output schema for the audit function Count, the software generates two labels namely $Count_objectname and $CountError_objectname

If the audit point is on a column, the software generates an audit label namely $auditfunction_objectname

If the audit point is in an embedded data flow, the labels generated are namely $Count_objectname_embeddedDFname, $CountError_objectname_embeddedDFname and $auditfunction_objectname_embeddedDFname

Audit Functions

There are four Audit Functions available in BODS.

- COUNT function helps to get the audit information on a table or output schema. The default datatype is INTEGER.

- SUM function helps to generate audit information like summation of measure type columns. E.g. Revenue. Accepted datatypes are INTEGER, DECIMAL, DOUBLE, REAL.

- AVERAGE function helps to generate audit information like average of measure type columns. E.g. Profit Margin. Accepted datatypes are INTEGER, DECIMAL, DOUBLE, REAL.

- CHECKSUM function helps to audit based on the hash values generated for VARCHAR datatype columns. The order of rows is important for the result of CHECKSUM function.

Error Count Statistics

BODS collects two types of statistics when we use a Count audit function. Good row count for rows processed without any error. Error row count for rows that the job could not process but ignores those rows to continue processing. When we specify the Use overflow file option in the Source or Target Editor we can handle the error rows.

Let us now see how to define the Audit Points and the Audit Functions. We can also modify the default Audit Labels generated by Data Services if necessary.

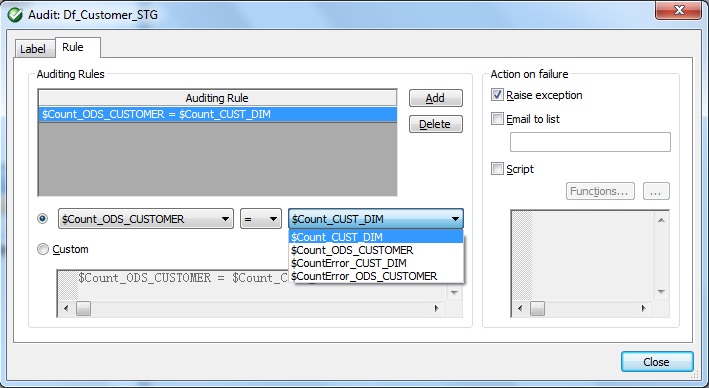

Audit Rule

An Audit Rule is a Boolean expression which consists of a Left-Hand-Side (LHS), a Boolean operator, and a Right-Hand-Side (RHS). The LHS can be a single audit or multiple audit labels that form an expression with one or more mathematical operators, or a function with audit labels as parameters. The RHS can also be a single or multiple audit labels that form an expression with one or more mathematical operators, a function with audit labels as parameters, or a constant. Examples:

The following Boolean expressions are examples of audit rules:

- $Count_ODS_CUSTOMER = $Count_DW_CUSTOMER

- $Sum_CALLS_PREPAID + $Sum_CALLS_POSTPAID = $Sum_DW_CALLS

- round($Avg_CALL_TIME) >= 30

Audit Notification

There are three types of Actions available for Notification in the event of an Audit Failure namely Email to list, Script and Raise exception. We can select either all three actions or can choose any combination of the actions. Find below the order these actions are executed in the event when all the actions are selected as a part of notification for audit failure.

- Email to list — BODS sends a notification of which audit rule failed to the email addresses mentioned for this option.

- Script — BODS executes the custom script mentioned for this option in the event of Audit rule failure.

- Raise exception — The job fails if an Audit rule fails, and the error log shows which audit rule failed. The job stops at the first audit rule that fails. This action is the default. We can continue with the job execution if we place the audit exception in a try/catch block.

We need to the define the Audit Rules related to the Audit Points or Audit Labels. Based on the success or failure of this rule we will generate error message.

Viewing Audit Results

We can find the audit status in the Job Monitor Log, when we set Audit Trace to Yes on the Trace tab in the Execution Properties window of the Job. We can see messages for audit rules that passed and failed. If the audit rule fails, the places that display audit information depends on the Action on failure option that was selected.

- When Raise exception action is selected and an audit rule fails the Job Error Log and the Metadata Reports shows the rule that failed.

- In case of Email to list we get notified of audit rule failure via Email message. Audit rule statistics are also available in the Metadata Reports.

- For failure action type Script the custom script is executed and the audit statistics can be viewed from the Metadata Reports.

Metadata Reports

We can view passed and failed audit rules result in the metadata reports. We can look at the Audit Status column in the Data Flow Execution Statistics reports of the Metadata Report tool. This Audit Status column has the following values:

- Not Audited

- Passed — All audit rules succeeded. This value is a link to the Auditing Details report which shows the audit rules and values of the audit labels.

- Information Collected — This status occurs when we define audit labels to collect statistics but we do not define audit rules. This value is a link to the Auditing Details report which shows the values of the audit labels.

- Failed — Audit rule failed. This value is a link to the Auditing Details report which shows the rule that failed and values of the audit labels.

Notes

- An audit label can become invalid if we delete the audit label in an embedded data flow that the parent data flow has enabled.

- An audit label can become invalid if we delete or rename an object that had an audit point defined on it.

- We can edit the audit label name while creating the audit function and before creating an audit rule that uses the label.

- If we edit the label name after using it in an audit rule, the audit rule does not automatically use the new name. We must redefine the rule with the new label name.

- If we define multiple rules in a data flow, all rules must succeed or the audit fails.

- Auditing is disabled when you run a job with the debugger.

- We cannot audit NRDM schemas or real-time jobs.

- We cannot audit within an ABAP Dataflow, but we can audit the output of an ABAP Dataflow.

- If we use the CHECKSUM audit function in a job that normally executes in parallel, the software disables the DOP for the whole data flow. The order of rows is important for the result of CHECKSUM, and DOP processes the rows in a different order than in the source.

- We can audit the number of rows loaded using bulkload dataflow only for those that uses the Oracle API method.

- While executing the job, the Enable auditing option in the Execution Properties window is checked by default. If we do not want to collect audit statistics for the specific job execution clear the selection.

- If we clear the action Raise exception and an audit rule fails, the job will complete successfully and the audit does not write messages to the job log. We can view which rule failed in the Auditing Details report in the Metadata Reporting tool.

- If a pushdown_sql function is after an audit point, the software cannot execute it.

- If we add an audit point prior to an operation that is usually pushed down to the database server, performance might degrade because pushdown operations cannot occur after an audit point.

When we audit the output data of an object, the optimizer cannot pushdown operations after the audit point. Therefore, if the performance of a query that is pushed to the database server is more important than gathering audit statistics from the source, we should define the first audit point on the query or later in the data flow.

For example, suppose a query has a WHERE clause that is pushed to the database server that significantly reduces the amount of data that returns to the software. So we can define the first audit point on the query, rather than on the source, to obtain audit statistics on the query results.

Audit Result Logging

In case we want to capture or log the Audit Results to Database tables we can use the custom script as:

$Count_ODS_CUSTOMER = $Count_CUST_DIM

AND sql('Target_DS', 'insert into dbo.STG_RECON values (

\'ODS_CUSTOMER\',[ $Count_ODS_CUSTOMERM ],

\'CUST_DIM\',[ $Count_CUST_DIM ],

{ to_char( sysdate(), \'YYYY-MM-DD\' ) } )

') is NULL